The Paper reporter Shao Wen

This is an image generated by the AI system DALL-E 2 based on the text description "Shiba Inu dog wearing a beret and black turtleneck."

After a year, the upgraded version of DALL-E is here!

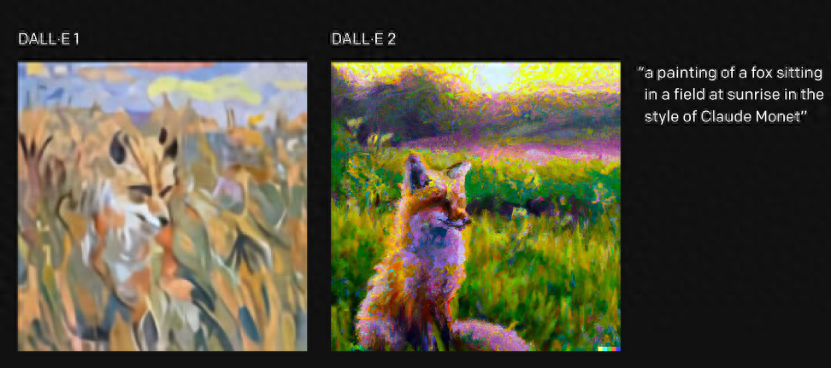

On April 6, local time, the artificial intelligence research organization OpenAI released DALL-E 2, a text-to-image generation program. Compared with the original, DALL-E 2 offers higher resolution and lower latency: in OpenAI's evaluation, 71.7% of evaluators preferred it for matching the caption and 88.8% preferred it for photorealism, and its output resolution is 4 times that of the original. It can also combine concepts, attributes and styles to create more vivid images, such as a painting of a fox on the prairie in the style of Claude Monet.

At the same time, two major functions have been added: finer-grained text modification of the image, and generation of multiple style variations of the original image.

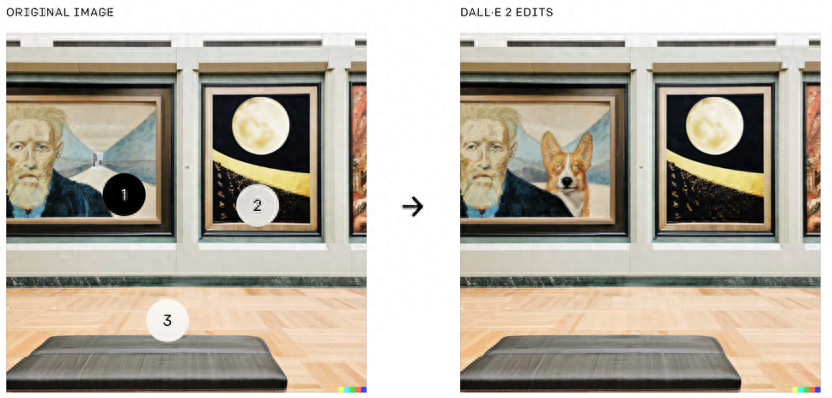

The former is like this!

Add a flamingo swim ring to area 2 of the original image.

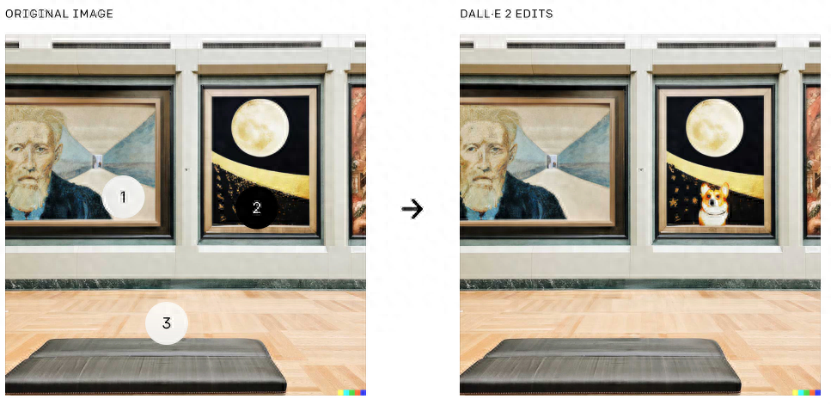

Add a puppy to area 1 and area 2 of the original image.

DALL-E 2 applies DALL-E's text-to-image capabilities at a more granular level. Users can start with an existing image, select an area, and tell the model how to modify it. The model can add (or remove) objects while taking into account details such as shadow direction, reflections and textures.

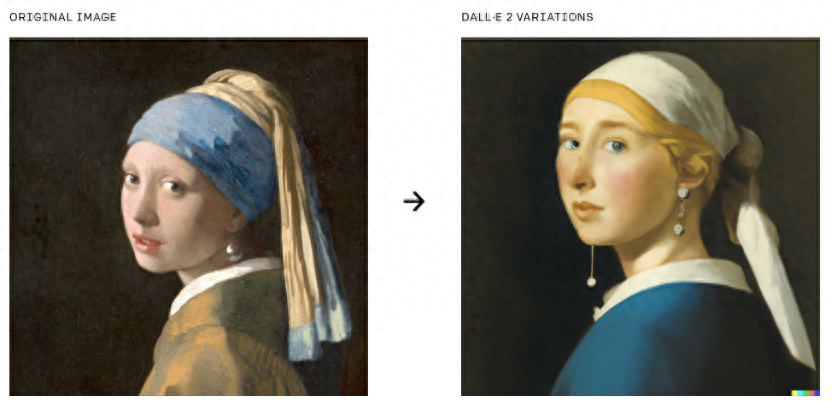

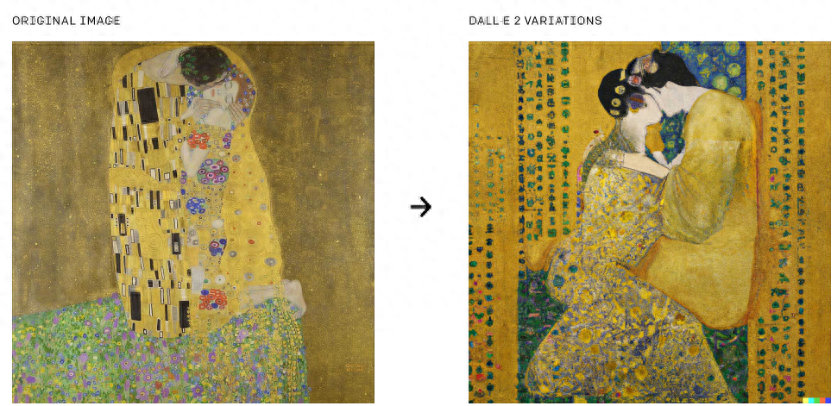

The latter is like this!

Use the same image as a base to create versions in different styles or arrangements.

The resulting image is 1024 x 1024 pixels, a jump from the 256 x 256 pixels of the original model.
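The "4 times" figure refers to the length of each side of the image, not the total number of pixels. The short calculation below is only an illustrative sketch of that arithmetic; the function name `resolution_gain` is made up for this example and is not part of any OpenAI tool.

```python
def resolution_gain(old_side: int, new_side: int) -> tuple[int, int]:
    """Return (gain per side, gain in total pixel count) for square images."""
    side_gain = new_side // old_side
    # Total pixels scale with the square of the side length.
    pixel_gain = (new_side * new_side) // (old_side * old_side)
    return side_gain, pixel_gain


# Side lengths quoted in the article: 256 for DALL-E, 1024 for DALL-E 2.
side_gain, pixel_gain = resolution_gain(256, 1024)
print(f"{side_gain}x per side, {pixel_gain}x total pixels")
```

In other words, a fourfold increase per side corresponds to sixteen times as many pixels in each image.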

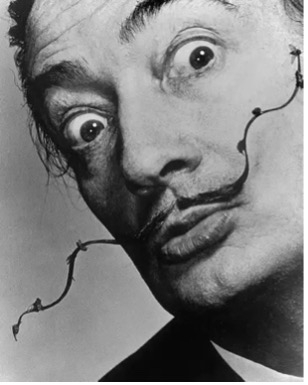

DALL-E takes its name from the artist Salvador Dalí and WALL-E, the robot protagonist of the film "WALL-E". The first version debuted in January 2021. It is based on GPT-3, whose largest version has 175 billion parameters, but DALL-E itself uses only 12 billion parameters and is trained on a dataset of text-image pairs to generate images from text descriptions.

Salvador Dalí

The protagonist of "WALL-E", the robot WALL-E

"DALL-E 1 simply takes the GPT-3 approach from language and applies it to generate images: we compress the image into a sequence of words and then learn to predict what comes next," OpenAI research scientist Prafulla Dhariwal said.

But word matching doesn't necessarily capture what humans consider important, and the prediction process limits how realistic the images can be. So OpenAI turned to CLIP, a computer vision system it released last year, to look at images and summarize their content the way a human would.

Part of the image automatically created by the DALL-E system based on the text "Avocado-shaped armchair"

CLIP underpinned the original DALL-E, and DALL-E 2 combines the strengths of CLIP and diffusion models. The "diffusion" process of image generation can be understood as starting from a pattern of random dots and gradually filling in the image with more and more detail. Diffusion models can greatly improve the fidelity of generated images, at the expense of diversity.
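The idea of starting from random dots and refining step by step can be shown with a toy sketch. This is not how DALL-E 2 is implemented: here the "image" is a short list of numbers, and the hypothetical `toy_denoise` function is handed the target directly, whereas a real diffusion model uses a trained neural network to predict what each cleaner step should look like.

```python
import random


def toy_denoise(target, steps=50, seed=0):
    """Start from pure noise and refine toward `target` over several steps.

    ASSUMPTION: `target` stands in for the prediction of a trained network.
    A real diffusion model learns that prediction from data.
    """
    rng = random.Random(seed)
    # Step 0: pure Gaussian noise, one value per "pixel".
    image = [rng.gauss(0.0, 1.0) for _ in target]
    history = [list(image)]
    for step in range(1, steps + 1):
        # Fraction of the remaining gap closed now; equals 1 on the last step.
        blend = 1.0 / (steps - step + 1)
        # Freshly injected noise shrinks each step and is zero on the last one.
        noise_scale = 0.1 * (1.0 - step / steps)
        image = [
            pixel + blend * (goal - pixel) + rng.gauss(0.0, noise_scale)
            for pixel, goal in zip(image, target)
        ]
        history.append(list(image))
    return image, history


def mean_abs_error(image, target):
    """Average absolute difference between two equal-length lists."""
    return sum(abs(p - g) for p, g in zip(image, target)) / len(target)


target = [0.0, 0.25, 0.5, 0.75, 1.0, 0.75, 0.5, 0.25]
final, history = toy_denoise(target)
print("error at start:", mean_abs_error(history[0], target))
print("error at end:  ", mean_abs_error(final, target))
```

Each pass removes a little more noise, so the early steps settle the rough shape and the later steps settle the fine detail, which is the intuition behind the description above.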

Image generated by DALL-E 2 from the description "Teddy bears mixing sparkling chemicals as mad scientists, steampunk."

To prevent the generated images from being misused, OpenAI has implemented some built-in protection measures.

The model is trained on a dataset that has been filtered to remove objectionable data, and it will be tested by OpenAI-vetted partners. Users are prohibited from uploading or generating images that are "non-G-rated" or "likely to cause harm", as well as anything involving hate symbols, nudity, obscene gestures, or images of “major conspiracies or events related to major ongoing geopolitical events.”

The model also cannot generate any recognizable faces based on names, even when asked for something like the "Mona Lisa." At the same time, DALL-E 2 puts a watermark on the generated images to indicate that the work is AI-generated. Ideally, these measures would limit its ability to generate objectionable content.

As before, the tool is not being released directly to the public. But researchers can apply to preview the system, and OpenAI hopes to later incorporate DALL-E 2 into the organization's API toolset, making it available to third-party applications.

"We want to do this process in stages to continually evaluate how to release this technology safely from the feedback we get," Dhariwal said.

Editor in charge: Li Yuequn

Proofreading: Luan Meng
