Technology giants’ caution about risk seems to have put them at a disadvantage in the competition with startups. “The field is growing so fast, and it’s no surprise to me that the leaders are smaller companies.”

The development of artificial intelligence (AI) has gone through several waves of enthusiasm. The latest wave has been driven by generative AI such as DALL-E and ChatGPT. In this round, technology giants appear to be temporarily losing ground to startups because of their caution on ethical issues.

On January 27, local time, Google published research on MusicLM, an AI model that can generate high-fidelity music of any type from text descriptions. However, citing concerns about the risks, Google has no immediate plans to release the model. “We emphasize that more work is needed in the future to address these risks associated with music generation; we currently have no plans to release the model,” reads the paper published by Google.

AI generates songs with complex composition and high fidelity

This is not the first AI system to generate songs from text. Riffusion, built by hobbyists on top of the Stable Diffusion model, Google's own AudioLM, and Jukebox from the AI research lab OpenAI can also generate music. However, MusicLM’s model and its massive training dataset (280,000 hours of music) allow it to produce songs with unusually complex compositions or unusually high fidelity.

Not only can MusicLM combine genres and instruments, it can also compose tracks from abstract concepts that are often difficult for computers to grasp. For example, given a prompt such as "a hybrid of dance music and reggae with spacey, otherworldly tunes that evoke a sense of wonder and awe," MusicLM can deliver.

Google researchers showed that the system can build on existing melodies, whether hummed, sung, whistled or played on an instrument. In addition, MusicLM has a "story mode" that programs changes in style, mood and rhythm at specific times. For example, a sequence of descriptions such as "time to meditate", "time to wake up" and "time to run" can be strung together to create a melody that tells a "story."

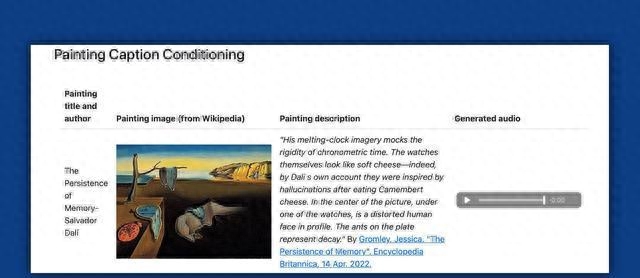

MusicLM can also generate music in a matching style when guided by a combination of pictures and their captions.

Like many AI generators, MusicLM has its flaws: some pieces sound strange, and the vocals are often unintelligible. Although MusicLM can technically generate human voices, including choral harmonies, most of the "lyrics" range from barely recognizable English to outright gibberish. They are sung by synthetic voices that sound like a blend of several artists' voices.

AI-generated music may infringe copyright law

The researchers released the MusicCaps dataset, which contains more than 5,500 music-text pairs with rich text descriptions written by human experts. The dataset has been made public to "support further research." At the same time, the researchers acknowledged in their paper the risks associated with music generation, namely the potential misappropriation of creative content.

In one experiment, Google researchers found that about 1% of the music generated by the system was directly copied from the songs it was trained on. If MusicLM or a similar system is ever made available, significant legal issues seem inevitable, even if such systems are positioned as tools that assist artists rather than replace them.

In fact, there are already relevant cases. In 2020, American rapper Jay-Z's record label filed copyright strikes against the YouTube channel Vocal Synthesis for using AI to create Jay-Z "covers" of Billy Joel's "We Didn't Start the Fire" and other songs.

A white paper written by Eric Sunray of the American Music Publishers Association argued that AI music generators like MusicLM infringe the reproduction right under U.S. copyright law by assembling coherent audio from the works they absorb from their training data.

When OpenAI released Jukebox, critics also questioned whether it is reasonable to train AI models on copyrighted music. Similar concerns have been raised about image-, code- and text-generating AI systems, whose training data is often collected from the web without the creators' knowledge.

Several lawsuits related to generative AI are currently under way. Microsoft, GitHub and OpenAI face a class action lawsuit accusing them of violating copyright law through Copilot, GitHub's "AI programmer" plug-in that automatically generates complete code from partial code or comments.

Midjourney and Stability AI, the companies behind two popular AI image-generation tools, are also facing a lawsuit accusing them of violating the rights of millions of artists by training their tools on images scraped from the web.

Tech giants fall behind, startups become leaders

Some AI ethicists worry that Big Tech's rush to bring generative AI systems to market, before trust and safety experts have had a chance to study them, could expose billions of people to potential harms such as spreading inaccurate information, generating fake photos, or helping students cheat on exams.

“We believe that AI is a fundamental, transformative technology that can be extremely useful to individuals, businesses and society, and we need to consider the wider social impact these innovations may have. We continue to test our AI technology internally to ensure it is useful and safe," said Google spokesperson Lily Lin.

Tech giants' caution about these risks appears to have put them at a disadvantage in the competition with startups.

Mark Riedl, a professor of computing and machine learning expert at the Georgia Institute of Technology, told the Washington Post that “ChatGPT’s underlying technology is not necessarily more advanced than the technology developed by Google and Meta, but OpenAI's practice of releasing its language models for public use gives it a real advantage."

Over the past year or so, several of Google's top AI researchers have left to launch startups built around large language models, including Character.AI, Cohere, Adept, Inflection.AI and Inworld AI. In addition, search startups using similar models are developing chat interfaces, such as Neeva, run by former Google executive Sridhar Ramaswamy.

Nick Frosst, who spent three years at Google Brain, said big companies like Google and Microsoft typically focus on using artificial intelligence to improve their massive existing business models. “The field is growing so fast, and it’s no surprise to me that the leaders are smaller companies.”

Posted by: Lomu. Source: https://www.daogebangong.com/en/articles/detail/gu-ge-kai-fa-chu-cong-wen-ben-sheng-cheng-gao-bao-zhen-yin-yue-de-ren-gong-zhi-neng-dan-bu-ji-hua-fa-bu.html
